We all know that working with data can be a bit of a chore. But what if you could skip the repetitive tasks and jump straight into analysis? That’s where Tableau Published Data Sources come in. Rather than each person in your organization creating their own data sources from scratch, a published data source allows you to create it once, upload it to Tableau Server or Tableau Online, and make it available to everyone with just a few clicks.

“A picture is worth a thousand words, but a data visualization is worth a thousand charts.”

– Edward Tufte, Statistician and Professor Emeritus

But what exactly does that mean, and why is it essential to your organization? Let’s break it down.

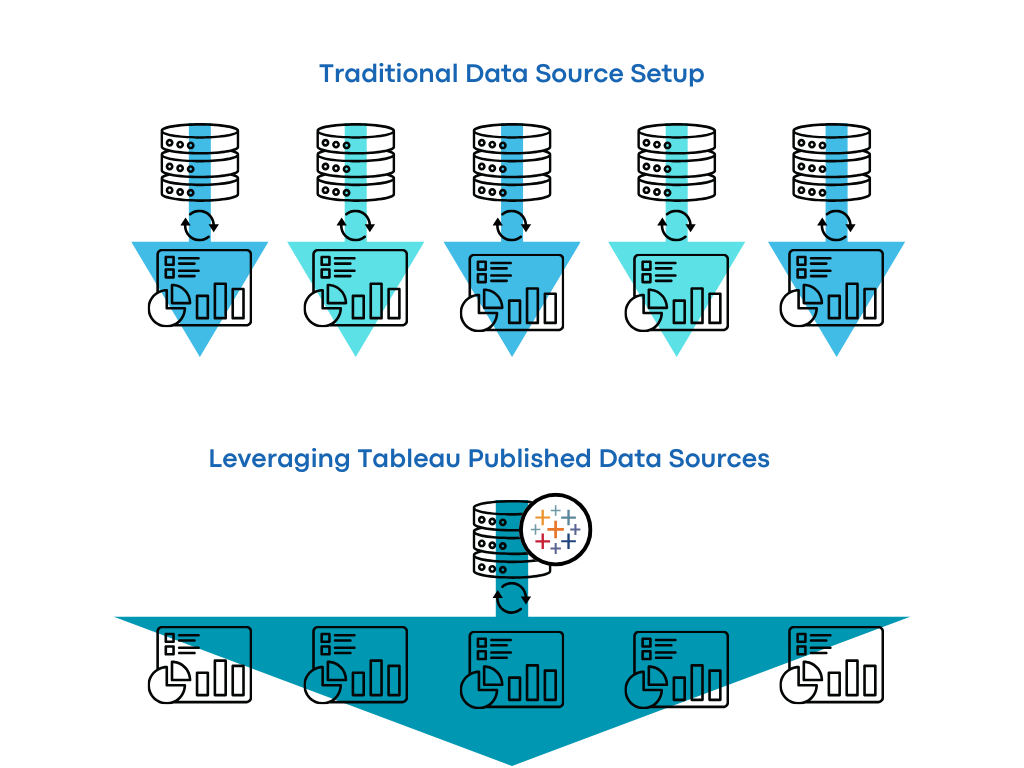

How Published Data Sources Work

A Published Data Source is a predefined, trusted data set that's uploaded to Tableau Server or Tableau Online. Once it's published, anyone in your organization with the appropriate permissions can connect to it, which eliminates the need to rebuild the same data set over and over again.

Instead of each user performing the same tasks (e.g., writing complex queries), you only need to do them once. And because the result is shared with everyone, you save time, avoid errors, and ensure consistency across the board. Plus, for extract-based data sources you can schedule regular refreshes, so everyone is always working with the most up-to-date information.

The Benefits of Implementing Published Data Sources: Time Savings

Since we’re all busy people, let’s start with the time-saving benefits. With Published Data Sources, the need for redundant work is greatly reduced. Here’s how:

- Save Time on Data Prep: Instead of each analyst recreating the same data transformations, cleaning processes, and complex queries, users connect to a pre-built, reliable data source. This significantly reduces the time spent on data wrangling, ETL work, and writing calculations, so analysts can jump straight into analysis and dashboard creation instead of spending precious time getting the data into shape.

- Simplified Maintenance: Once a data source is published, its owner is responsible for maintaining it. Users can focus on analysis while the owner handles updates, refreshes, and any data adjustments. If calculations break or the underlying data changes, the data source owner resolves the problem, so other users spend less time on maintenance and the entire process is streamlined.

- Efficiency for Server Teams: The more dashboards and users that rely on a published data source, the fewer data sources the server team has to manage. Instead of handling multiple duplicate data sources, the server team can focus on a smaller number of published data sources. This leads to a more efficient server environment, requiring fewer resources to manage, refresh, and maintain the data.

- Reduced Resource Requirements: Using shared published data sources also shrinks the technology footprint on Tableau Server. Every time a user connects to an existing data source instead of creating their own, the server avoids storing and refreshing yet another copy of the data. “Gold” published data sources (trusted, validated data sources that meet the highest standards for accuracy and performance) are also tuned for performance, so the resources they do use are used as efficiently as possible.

“Published data sources in Tableau serve as the backbone of a mature analytics environment, turning raw data into consistent, trusted insights that empower organizations to make informed decisions, faster and more confidently.”

– Steve Lopez, President & CEO, Next Level Analytics

The Benefits of Implementing Published Data Sources: Reduction of Complexity

Now that we’ve covered time savings, let’s dive into how Published Data Sources help reduce complexity across the board.

- Reliable and Coordinated Data: Gold-standard published data sources are not only validated and optimized for performance but also refreshed on a coordinated cycle at the server level, so all users work with the same accurate, up-to-date data. Validating the data through third-party checks and building in pre-defined calculations reduces the chance of discrepancies and errors. The unified refresh cycle also removes the need for each user to re-validate their own copy, giving your organization a single source of truth.

- Data Cataloging and Documentation: Published data sources can be thoroughly cataloged and documented, detailing how calculations and fields are defined and which upstream sources they come from. This lets users understand the data's origins and structure, so new users can dive straight into analysis knowing exactly where to find the data. The documentation also helps users trace the data back to its source and confirm that it is relevant to the business question they are answering.

- Fewer Bottlenecks and Faster Adoption: In a traditional setup, analysts often spend significant time preparing data. With Published Data Sources, data preparation becomes a one-time task managed by the data source owner, so analysts can get straight to the work that matters: analysis and insights. New Tableau users can immediately access curated Published Data Sources, which flattens the steep learning curve and lets them start finding insights almost right away, speeding up adoption across the team.

- Cost and Maintenance Savings: Managing a single Published Data Source that serves multiple users is far more cost-effective than managing multiple individual data sources. With fewer data sources to maintain, you reduce storage and refresh costs. This allows you to streamline resources and reduce the technology footprint on your server. The “Gold” published data sources, being optimized for performance, also help reduce the resources needed for refreshes and ensure efficient use of server resources.

Read Project 3’s case study: “Real-World Impact: How a COE Transforms Tableau Adoption”

How to Implement Published Data Sources

To fully realize the potential of Published Data Sources, your Tableau Center of Excellence (COE) plays a key role. Here’s how the process works:

- Governance: The COE establishes clear guidelines for creating and submitting new published data sources and is responsible for ensuring that the data is validated and meets organizational standards. The COE also checks each submission for duplicates, confirming whether a new data source is genuinely unique or repeats an existing one. This step maintains consistency and ensures that only high-quality data is used.

- Optimization & Performance: The COE optimizes queries, calculations, and refresh schedules to ensure that published data sources are performing at their best. This includes tuning data sources for speed and efficiency, ensuring quick access and minimal load on the system. Regularly scheduled refreshes keep the data current without putting unnecessary strain on the server.

- Change Control: As data evolves, updates to published data sources will inevitably be required. The COE coordinates any changes and makes sure they are communicated clearly to the user community. Whether it’s a new calculation, an update to the data structure, or another necessary modification, the COE handles the change systematically without disrupting users’ workflows.

- Cataloging & Monitoring: All Gold published data sources are thoroughly cataloged, so every user can see what’s included, how the data is calculated, and where it comes from. The COE also continuously monitors the performance and usage of these data sources to catch potential issues early and keep them running smoothly.
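To make the duplicate check from the Governance step more concrete, here is a minimal Python sketch of one way a COE might screen a proposed data source against its existing catalog. It is not a Tableau feature: `DataSourceSpec`, `find_likely_duplicates`, the 0.8 threshold, and the sample field lists are all hypothetical, and the sketch assumes you already have each data source's field names (for example, exported from your catalog). It scores field overlap with Jaccard similarity: shared fields divided by distinct fields across both sources.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DataSourceSpec:
    """Hypothetical summary of a data source: its name and its field names."""
    name: str
    fields: frozenset[str]


def normalize(fields: frozenset[str]) -> frozenset[str]:
    """Lower-case and trim field names so 'Order ID' and 'order id ' compare equal."""
    return frozenset(f.strip().lower() for f in fields)


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Shared fields divided by distinct fields across both sets (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_likely_duplicates(
    candidate: DataSourceSpec,
    catalog: list[DataSourceSpec],
    threshold: float = 0.8,  # hypothetical cut-off; tune to your own catalog
) -> list[tuple[str, float]]:
    """Return (name, score) for catalog entries at or above the threshold, highest score first."""
    wanted = normalize(candidate.fields)
    matches = []
    for existing in catalog:
        score = jaccard(wanted, normalize(existing.fields))
        if score >= threshold:
            matches.append((existing.name, round(score, 2)))
    return sorted(matches, key=lambda match: match[1], reverse=True)


# Hypothetical example: an analyst proposes a new sales extract.
catalog = [
    DataSourceSpec("Sales - Gold", frozenset({"Order ID", "Order Date", "Region", "Sales", "Profit"})),
    DataSourceSpec("HR Headcount", frozenset({"Employee ID", "Department", "Hire Date"})),
]
candidate = DataSourceSpec("Regional Sales Extract", frozenset({"order id", "Order Date", "Region", "Sales"}))

print(find_likely_duplicates(candidate, catalog))  # [('Sales - Gold', 0.8)]
```

A high score only flags a submission for review. Two data sources can share most of their fields yet differ in filters, grain, or business logic, so a person on the COE still makes the final call.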

By putting this structure in place, your Tableau Center of Excellence ensures that Published Data Sources are effectively governed, optimized, and maintained, allowing your organization to fully leverage them for consistent, high-quality analysis.

Conclusion

By implementing Tableau Published Data Sources, your team can save time, eliminate redundancy, and ensure a smooth workflow for everyone. With better collaboration, lower maintenance, and improved resource efficiency, this simple but powerful feature will transform the way your organization works with data.

Ready to make the switch? Centralizing your data into Published Data Sources will have your team working faster, smarter, and more efficiently—less data wrangling, more insight. And here’s the best part: Project 3 Consulting and Next Level Analytics are here to help! We specialize in maximizing Tableau’s potential by ensuring your infrastructure, governance, and user enablement strategies work seamlessly together to drive meaningful impact.

Contact us today to learn how we can transform your analytics environment and help you make data-driven decisions that truly move the needle.